The Unix Shell

Opening

Nelle Nemo, a marine biologist, has just returned from a six-month survey of the North Pacific Gyre, where she has been sampling gelatinous marine life in the Great Pacific Garbage Patch. She has 1520 samples in all, and now needs to:

- Run each sample through an assay machine that will measure the relative abundance of 300 different proteins. The machine's output for a single sample is a file with one line for each protein.

-

Calculate statistics for each of the proteins separately

using a program her supervisor wrote called

goostat. -

Compare the statistics for each protein with corresponding statistics for each other protein

using a program one of the other graduate students wrote called

goodiff. - Write up. Her supervisor would really like her to do this by the end of the month so that her paper can appear in an upcoming special issue of Aquatic Goo Letters.

It takes about half an hour for the assay machine to process each sample. The good news is, it only takes two minutes to set each one up. Since her lab has eight assay machines that she can use in parallel, this step will "only" take about two weeks.

The bad news is,

if she has to run goostat and goodiff by hand,

she'll have to enter filenames and click "OK" roughly 3002 times

(300 runs of goostat, plus 300×299 runs of goodiff).

At 30 seconds each,

that will 750 hours, or 18 weeks.

Not only would she miss her paper deadline,

the chances of her getting all 90,000 commands right are approximately zero.

This chapter is about what she should do instead. More specifically, it's about how she can use a command shell to automate the repetitive steps in her processing pipeline, so that her computer can work 24 hours a day while she writes her paper. As a bonus, once she has put a processing pipeline together, she will be able to use it again whenever she collects more data.

What and Why

Objectives

- Draw a diagram to show how the shell relates to the keyboard, the screen, the operating system, and users' programs.

- Explain when and why command-line interfaces should be used instead of graphical interfaces.

Duration: 10 minutes.

Lesson

At a high level, computers do four things:

- run programs;

- store data;

- communicate with each other; and

- interact with us.

They can do the last of these in many different ways, including direct brain-computer links and speech interfaces. Since these are still in their infancy, most of us use windows, icons, mice, and pointers. These technologies didn't become widespread until the 1980s, but their roots go back to Doug Engelbart's work in the 1960s, which you can see in what has been called "The Mother of All Demos".

Going back even further, the only way to interact with early computers was to rewire them. But in between, from the 1950s to the 1980s, most people used a technology based on the old-fashioned typewriter. That technology is still widely used today, and is the subject of this chapter.

When I say "typewriter", I actually mean a line printer connected to a keyboard (Figure 1). These devices only allowed input and output of the letters, numbers, and punctuation found on a standard keyboard, so programming languages and interfaces had to be designed around that constraint—though if you had enough time on your hands, you could draw simple pictures using just those characters (Figure 2).

,-. __

,' `---.___.---' `.

,' ,- `-._

,' / \

,\/ / \\

)`._)>) | \\

`>,' _ \ / ||

) \ | | | |\\

. , / \ | `. | | ))

\`. \`-' )-| `. | /((

\ `-` .` _/ \ _ )`-.___.--\ / `'

`._ ,' `j`.__/ `. \

/ , ,' \ /` \ /

\__ / _) ( _) (

`--' /____\ /____\

Today, this kind of interface is called a command-line user interface, or CLUI, to distinguish it from the graphical user interface, or GUI, that most of us now use. The heart of a CLUI is a read-evaluate-print loop, or REPL: when the user types a command, the computer reads it, executes it, and prints its output. (In the case of old teletype terminals, it literally printed the output onto paper, a line at a time.) The user then types another command, and so on until the user logs off.

This description makes it sound as though the user sends commands directly to the computer, and the computer sends output directly to the user. In fact, there is usually a program in between called a command shell (Figure 3). What the user types goes into the shell; it figures out what commands to run and orders the computer to execute them.

A shell is a program like any other. What's special about it is that its job is to run other programs, rather than to do calculations itself. The most popular Unix shell is Bash, the Bourne Again SHell (so-called because it's derived from a shell written by Stephen Bourne—this is what passes for wit among programmers). Bash is the default shell on most modern implementations of Unix, and in most packages that provide Unix-like tools for Windows, such as Cygwin.

Using Bash, or any other shell, sometimes feels more like programming that like using a mouse. Commands are terse (often only a couple of characters long), their names are frequently cryptic, and their output is lines of text rather than something visual like a graph. On the other hand, the shell allows us to combine existing tools in powerful ways with only a few keystrokes, and to set up pipelines to handle large volumes of data automatically. In addition, the command line is often the easiest way to interact with remote machines. As clusters and cloud computing become more popular for scientific data crunching, being able to drive them is becoming a necessary skill.

Summary

- A shell is a program whose primary purpose is to read commands and run other programs.

- The shell's main advantages are its high action-to-keystroke ratio, its support for automating repetitive tasks, and that it can be used to access networked machines.

- The shell's main disadvantages are its primarily textual nature and how cryptic its commands and operation can be.

Files and Directories

Objectives

- Explain the similarities and differences between a file and a directory.

- Translate an absolute path into a relative path and vice versa.

- Construct absolute and relative paths that identify specific files and directories.

- Explain the steps in the shell's read-run-print cycle.

- Identify the actual command, flags, and filenames in a command-line call.

- Demonstrate the use of tab completion, and explain its advantages.

Duration: 15 minutes (longer if people have trouble getting an editor to work).

Lesson

The part of the operating system responsible for managing files and directories is called the file system. It organizes our data into files, which hold information, and directories (also called "folders"), which hold files or other directories.

Several commands are frequently used to create, inspect, rename, and delete files and directories. To start exploring them, let's log in to the computer by typing our user ID and password. Most systems will print stars to obscure the password, or nothing at all, in case some evildoer is shoulder surfing behind us.

login: vlad password: ******** $

Once we have logged in we'll see a prompt,

which is how the shell tells us that it's waiting for input.

This is usually just a dollar sign,

but which may show extra information such as our user ID or the current time.

Type the command whoami,

then press the Enter key

(sometimes marked Return)

to send the command to the shell.

The command's output is the ID of the current user,

i.e.,

it shows us who the shell thinks we are:

$ whoami vlad $

More specifically,

when we type whoami

the shell:

- finds a program called

whoami, - runs it,

- waits for it to display its output, and

- displays a new prompt to tell us that it's ready for more commands.

Getting Around

Next,

let's find out where we are

by running a command called pwd

(which stands for "print working directory").

At any moment,

our current working directory

is our current default directory,

i.e., the directory that the computer assumes we want to run commands in

unless we explicitly specify something else.

Here,

the computer's response is /users/vlad,

which is Vlad's home directory:

$ pwd /users/vlad $

Alphabet Soup

If the command to find out who we are is whoami,

the command to find out where we are ought to be called whereami,

so why is it pwd instead?

The usual answer is that in the early 1970s,

when Unix was first being developed,

every keystroke counted:

the devices of the day were slow,

and backspacing on a teletype was so painful

that cutting the number of keystrokes

in order to cut the number of typing mistakes

was actually a win for usability.

The reality is that commands were added to Unix one by one,

without any master plan,

by people who were immersed in its jargon.

The result is as inconsistent as the roolz uv Inglish speling,

but we're stuck with it now.

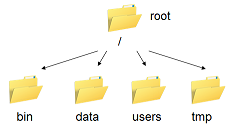

To understand what a "home directory" is,

let's have a look at how the file system as a whole is organized

(Figure 4).

At the top is the root directory

that holds everything else the computer is storing.

We refer to it using a slash character / on its own;

this is the leading slash in /users/vlad.

Inside that directory (or underneath it, if you're drawing a tree) are several other directories:

bin (which is where some built-in programs are stored),

data (for miscellaneous data files),

users (where users' personal directories are located),

tmp (for temporary files that don't need to be stored long-term),

and so on.

We know that our current working directory /users/vlad is stored inside /users

because /users is the first part of its name.

Similarly,

we know that /users is stored inside the root directory / because its name begins with /.

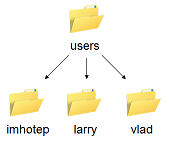

Underneath /users,

we find one directory for each user with an account on this machine

(Figure 5).

The Mummy's files are stored in /users/imhotep,

Wolfman's in /users/larry,

and ours in /users/vlad,

which is why vlad is the last part of the directory's name.

Notice, by the way, that there are two meanings for the / character.

When it appears at the front of a file or directory name, it refers to the root directory.

When it appears inside a name, it's just a separator.

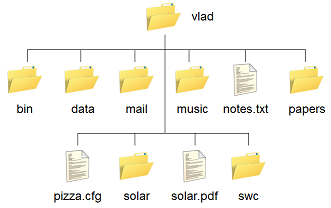

Let's see what's in Vlad's home directory by running ls,

which stands for "listing":

$ ls bin data mail music notes.txt papers pizza.cfg solar solar.pdf swc $

ls prints the names of the files and directories in the current directory in alphabetical order,

arranged neatly into columns.

To make its output more comprehensible,

we can give it the flag -F by typing ls -F.

This tells ls to add a trailing / to the names of directories:

$ ls -F bin/ data/ mail/ music/ notes.txt papers/ pizza.cfg solar/ solar.pdf swc/ $

As you can see,

/users/vlad contains seven sub-directories

(Figure 6).

The names that don't have trailing slashes—notes.txt, pizza.cfg, and solar.pdf—are

plain old files.

What's In A Name?

You may have noticed that all of Vlad's files' names are "something dot something".

This is just a convention:

we can call a file mythesis or almost anything else we want.

However,

most people use two-part names most of the time

to help them (and their programs) tell different kinds of files apart.

The second part of such a name is called the filename extension,

and indicates what type of data the file holds:

.txt signals a plain text file,

.pdf indicates a PDF document,

.cfg is a configuration file full of parameters for some program or other,

and so on.

It's important to remember that this is just a convention.

Files contain bytes:

it's up to us and our programs to interpret those bytes according to the rules for PDF documents, images, and so on.

For example,

naming a PNG image of a whale as whale.mp3

doesn't somehow magically turn it into a recording of whalesong.

Now let's take a look at what's in Vlad's data directory

by running the command ls -F data.

The second parameter—the one without a leading dash—tells

ls that we want a listing of something other than our current working directory:

$ ls -F data amino_acids.txt elements/ morse.txt pdb/ planets.txt sunspot.txt $

The output shows us that there are four text files and two sub-sub-directories. Organizing things hierarchically in this way is a good way to keep track of our work: it's possible to put hundreds of files in our home directory, just as it's possible to pile hundreds of printed papers on our desk, but in the long run it's a self-defeating strategy.

Notice,

by the way

that we spelled the directory name data.

It doesn't have a trailing slash:

that's added to directory names by ls

when we use the -F flag

to help us tell things apart.

And it doesn't begin with a slash

because it's a relative path,

i.e., it tells ls how to find something from where we are,

rather than from the root of the file system

(Figure 7).

If we run ls -F /data

(with a leading slash)

we get a different answer,

because /data is an absolute path:

$ ls -F /data access.log backup/ hardware.cfg network.cfg $

The leading / tells the computer to follow the path from the root of the filesystem,

so it always refers to exactly one directory,

no matter where we are when we run the command.

What if we want to change our current working directory?

Before we do this,

pwd shows us that we're in /users/vlad,

and ls without any parameters shows us that directory's contents:

$ pwd /users/vlad $ ls bin/ data/ mail/ music/ notes.txt papers/ pizza.cfg solar/ solar.pdf swc/ $

We can use cd followed by a directory name to change our working directory.

cd stands for "change directory",

which is a bit misleading:

the command doesn't change the directory,

it changes the shell's idea of what directory we are in.

$ cd data

$

cd doesn't print anything,

but if we run pwd after it,

we can see that we are now in /users/vlad/data.

If we run ls without parameters now,

it lists the contents of /users/vlad/data,

because that's where we now are:

$ pwd /users/vlad/data $ ls amino_acids.txt elements/ morse.txt pdb/ planets.txt sunspot.txt $

OK, we can go down the directory tree: how do we go up? We could use an absolute path:

$ cd /users/vlad

$

but it's almost always simpler to use cd ..

to go up one level:

$ pwd /users/vlad/data $ cd ..

.. is a special directory name meaning "the directory containing this one",

or,

more succinctly,

the parent of the current directory.

No Output = OK

In general, shell programs do not output anything if no errors occurred. The principle behind this is "If there is nothing to report, shut up.", i. e. if there is output, either you specifically requested the program to do so or something bad happened.

This does not apply to programs like ls or

pwd, since the purpose of such programs is

to output something.

Sure enough,

if we run pwd after running cd ..,

we're back in /users/vlad:

$ pwd /users/vlad $

The special directory .. doesn't usually show up when we run ls.

If we want to display it,

we can give ls the -a flag:

$ ls -F -a ./ ../ bin/ data/ mail/ music/ notes.txt papers/ pizza.cfg solar/ solar.pdf swc/

-a stands for "show all";

it forces ls to show us file and directory names that begin with .,

such as ..

(which, if we're in /users/vlad, refers to the /users directory).

As you can see,

it also displays another special directory that's just called .,

which means "the current working directory".

It may seem redundant to have a name for it,

but we'll see some uses for it soon.

Orthogonality

The special names . and .. don't belong to ls;

they are interpreted the same way by every program.

For example,

if we are in /users/vlad/data,

the command ls ..

will give us a listing of /users/vlad.

Programmers call this orthogonality:

the meanings of the parts are the same no matter how they're combined.

Orthogonal systems tend to be easier for people to learn

because there are fewer special cases and exceptions to keep track of.

Everything we have seen so far works on Unix and its descendents,

such as Linux and Mac OS X.

Things are a bit different on Windows.

A typical directory path on a Windows 7 machine might be C:\Users\vlad.

The first part, C:, is a drive letter

that identifies which disk we're talking about.

This notation dates back to the days of floppy drives;

today,

different "drives" are usually different filesystems on the network.

Instead of a forward slash,

Windows uses a backslash to separate the names in a path.

This causes headaches because Unix uses backslash for input of special characters.

For example,

if we want to put a space in a filename on Unix,

we would write the filename as my\ results.txt.

Please don't ever do this, though:

if you put spaces,

question marks,

and other special characters in filenames on Unix,

you can confuse the shell for reasons that we'll see shortly.

Finally,

Windows filenames and directory names are case insensitive:

upper and lower case letters mean the same thing.

This means that the path name C:\Users\vlad could be spelled

c:\users\VLAD,

C:\Users\Vlad,

and so on.

Some people argue that this is more natural:

after all, "VLAD" in all upper case and "Vlad" spelled normally refer to the same person.

However,

it causes headaches for programmers,

and can be difficult for people to understand

if their first language doesn't use a cased alphabet.

For Cygwin Users

Cygwin tries to make Windows paths look more like Unix paths

by allowing us to use a forward slash instead of a backslash as a separator.

It also allows us to refer to the C drive as /cygdrive/c/ instead of as C:.

(The latter usually works too, but not always.)

Paths are still case insensitive,

though,

which means that if you try to put files called backup.txt (in all lower case)

and Backup.txt (with a capital 'B') into the same directory,

the second will overwrite the first.

Cygwin does something else that frequently causes confusion.

By default,

it interprets a path like /home/vlad to mean C:\cygwin\home\vlad,

i.e.,

it acts as if C:\cygwin was the root of the filesystem.

This is sometimes helpful,

but if you are using an editor like Notepad,

and want to save a file in what Cygwin thinks of as your home directory,

you need to keep this translation in mind.

Nelle's Pipeline: Organizing Files

Knowing just this much about files and directories,

Nelle is ready to organize the files that the protein assay machine will create.

First,

she creates a directory called north-pacific-gyre

(to remind herself where the data came from).

Inside that, she creates a directory called 2012-07-03,

which is the date she started processing the samples.

She used to use names like conference-paper and revised-results,

but she found them hard to understand after a couple of years.

(The final straw was when she found herself creating a directory called

revised-revised-results-3.)

Each of her physical samples is labelled

according to her lab's convention

with a unique ten-character ID,

such as "NENE01729A".

This is what she used in her collection log to record the location,

time, depth, and other characteristics of the sample,

so she decides to use it as part of each data file's name.

Since the assay machine's output is plain text,

she will call her files NENE01729A.txt,

NENE01812A.txt,

and so on.

All 1520 files will go into the same directory.

If she is in her home directory, Nelle can see what files she has using the command:

$ ls north-pacific-gyre/2012-07-03/

Since this is a lot to type, she can take advantage of Bash's command completion. If she types:

$ ls no

and then presses tab, Bash will automatically complete the directory name for her:

$ ls north-pacific-gyre/

If she presses tab again,

Bash will add 2012-07-03/ to the command,

since it's the only possible completion.

Pressing tab again does nothing,

since there are 1520 possibilities;

pressing tab twice brings up a list of all the files,

and so on.

This is called tab completion,

and we will see it in many other tools as we go on.

Summary

- The file system is responsible for managing information on disk.

- Information is stored in files, which are stored in directories (folders).

- Directories can also store other directories, which forms a directory tree.

/on its own is the root directory of the whole filesystem.- A relative path specifies a location starting from the current location.

- An absolute path specifies a location from the root of the filesystem.

- Directory names in a path are separated with '/' on Unix, but '\' on Windows.

- '..' means "the directory above the current one"; '.' on its own means "the current directory".

- Most files' names are

something.extension; the extension isn't required, and doesn't guarantee anything, but is normally used to indicate the type of data in the file. cd pathchanges the current working directory.ls pathprints a listing of a specific file or directory;lson its own lists the current working directory.pwdprints the user's current working directory (current default location in the filesystem).whoamishows the user's current identity.- Most commands take options (flags) which begin with a '-'.

Challenges

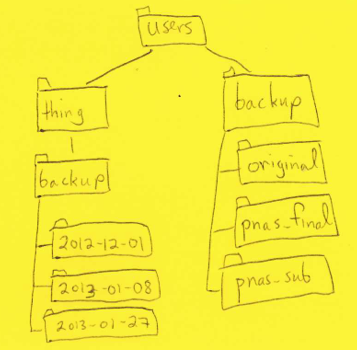

Please refer to Figure 8 when answering the challenges below.

If

pwddisplays/users/thing, what willls ../backupdisplay?../backup: No such file or directory2012-12-01 2013-01-08 2013-01-272012-12-01/ 2013-01-08/ 2013-01-27/original pnas_final pnas_sub

If

pwddisplays/users/fly, what command will displaythesis/ papers/ articles/ls pwdls -r -Fls -r -F /users/fly- Either #2 or #3 above, but not #1.

What does the command

cdwithout a directory name do?- It has no effect.

- It changes the working directory to

/. - It changes the working directory to the user's home directory.

- It is an error.

We said earlier that spaces in path names have to be marked with a leading backslash in order for the shell to interpret them properly. Why? What happens if we run a command like:

$ ls my\ thesis\ fileswithout the backslashes?

Creating Things

Objectives

- Create a directory hierarchy that matches a given diagram.

- Create files in that hierarchy using an editor or by copying and renaming existing files.

- Display the contents of a directory using the command line.

- Delete specified files and/or directories.

Duration: 10 minutes.

Lesson

We now know how to explore files and directories,

but how do we create them in the first place?

Let's go back to Vlad's home directory,

/users/vlad,

and use ls -F to see what it contains:

$ pwd /users/vlad $ ls -F bin/ data/ mail/ music/ notes.txt papers/ pizza.cfg solar/ solar.pdf swc/ $

Let's create a new directory called thesis

using the command mkdir thesis

(which has no output):

$ mkdir thesis

$

As you might (or might not) guess from its name,

mkdir means "make directory".

Since thesis is a relative path

(i.e., doesn't have a leading slash),

the new directory is made below the current one:

$ ls -F bin/ data/ mail/ music/ notes.txt papers/ pizza.cfg solar/ solar.pdf swc/ thesis/ $

However, there's nothing in it yet:

$ ls -F thesis

$

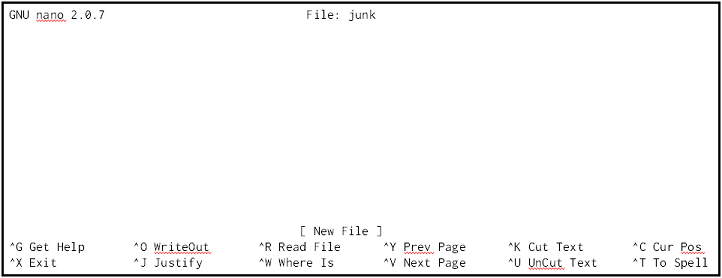

Let's change our working directory to thesis using cd,

then run an editor called Nano

to create a file called nano draft.txt:

$ cd thesis $ nano draft.txt

Nano is a very simple text editor that only a programmer could really love.

Figure 9 shows what it looks like when it runs:

the cursor is the blinking square in the upper left,

and the two lines across the bottom show us the available commands.

(By convention,

Unix documentation uses the caret ^ followed by a letter

to mean "control plus that letter",

so ^O means Control+O.)

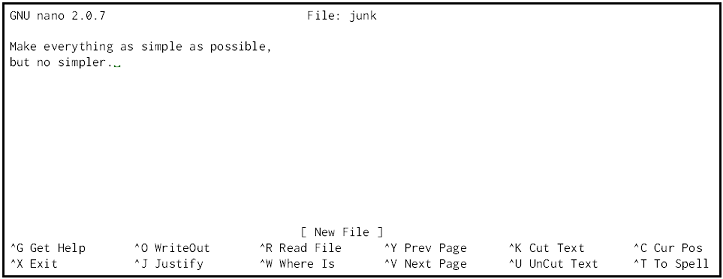

Let's type in a short quotation (Figure 10) then use Control-O to write our data to disk. Once our quotation is saved, we can use Control-X to quit the editor and return to the shell.

Which Editor?

When we say, "nano is a text editor,"

we really do mean "text":

it can only work with plain character data,

not tables,

images,

or any other human-friendly media.

We use it in examples because

almost anyone can drive it anywhere without training,

but please use something more powerful for real work.

On Unix systems (such as Linux and Mac OS X),

many programmers use Emacs

or Vim

(both of which are completely unintuitive, even by Unix standards),

or a graphical editor such as Gedit.

On Windows,

you may wish to use Notepad++.

No matter what editor you use, you will need to know where it searches for and saves files. If you start it from the shell, it will (probably) use your current working directory as its default location. If you use your computer's start menu, it may want to save files in your desktop or documents directory instead. You can change this by navigating to another directory the first time you "Save As..."

nano doesn't leave any output on the screen after it exits.

But ls now shows that we have created a file called draft.txt:

$ ls draft.txt $

If we just want to quickly create a file with no content, we

can use the command touch.

$ touch draft_empty.txt $ ls draft.txt draft_empty.txt $

The touch command has allowed us to create a file

without leaving the command line. As with text editors like

nano, touch will create the file in

your current working directory.

If we touch a file that already exists, the time of

last modification for the file is updated to the present time,

but the file is otherwise unaltered. We can demonstrate this by

running ls with the -l flag to give a

long listing of files in the

current directory which includes information about the date and

time of last modification.

$ ls -l -rw-r--r-- 1 username username 512 Oct 9 15:46 draft.txt -rw-r--r-- 1 username username 0 Oct 9 15:47 draft_empty.txt $ touch draft.txt $ ls -l -rw-r--r-- 1 username username 512 Oct 9 15:48 draft.txt -rw-r--r-- 1 username username 0 Oct 9 15:47 draft_empty.txt

Note how the modification time of draft.txt changes

after we touch it.

We can run ls with the -s flag

(for "size")

to show us how large draft.txt is:

$ ls -s 1 draft.txt 0 draft_empty.txt $

Unfortunately,

Unix reports sizes in disk blocks by default,

which might be the least helpful default imaginable.

If we add the -h flag,

ls switches to more human-friendly units:

$ ls -s -h 512 draft.txt 0 draft_empty.txt $

Here, 512 is the number of bytes in the draft.txt file.

This is more than we actually typed in because

the smallest unit of storage on the disk is typically a block of 512 bytes.

Let's start tidying up by running rm draft.txt:

$ rm draft.txt

$

This command removes files ("rm" is short for "remove").

If we run ls, only the draft_empty.txt

file remains:

$ ls draft_empty.txt $

Now we'll finish cleaning up by running rm draft_empty.txt:

$ rm draft_empty.txt

$

If we run ls again, its output is empty,

which tells us that both of our files are gone:

$ ls

$

Deleting Is Forever

Unix doesn't have a trash bin: when we delete files, they are unhooked from the file system so that their storage space on disk can be recycled. Tools for finding and recovering deleted files do exist, but there's no guarantee they'll work in any particular situation, since the computer may recycle the file's disk space right away.

Let's re-create draft.txt as an empty file,

and then move up one directory to /users/vlad using cd ..:

$ pwd /users/vlad/thesis $ touch draft.txt $ ls draft.txt $ cd .. $

If we try to remove the entire thesis directory using rm thesis,

we get an error message:

$ rm thesis rm: cannot remove `thesis': Is a directory $

This happens because rm only works on files, not directories.

The right command is rmdir, which is short for "remove directory":

It doesn't work yet either, though,

because the directory we're trying to remove isn't empty:

$ rmdir thesis rmdir: failed to remove `thesis': Directory not empty $

This little safety feature can save you a lot of grief,

particularly if you are a bad typist.

If we really want to get rid of thesis

we should first delete the file draft.txt:

$ rm thesis/draft.txt

$

The directory is now empty,

so rmdir can delete it:

$ rmdir thesis

$

With Great Power Comes Great Responsibility

Removing the files in a directory

just so that we can remove the directory

quickly becomes tedious.

Instead,

we can use rm with the -r flag

(which stands for "recursive"):

$ rm -r thesis

$

This removes everything in the directory,

then the directory itself.

If the directory contains sub-directories,

rm -r does the same thing to them,

and so on.

It's very handy,

but can do a lot of damage if used without care.

Let's create that directory and file one more time.

(Note that this time we're running touch with the path thesis/draft.txt,

rather than going into the thesis directory

and running touch on draft.txt there.)

$ pwd /users/vlad/thesis $ mkdir thesis $ touch thesis/draft.txt $ ls thesis draft.txt $

draft.txt isn't a particularly informative name,

so let's change the file's name using mv,

which is short for "move":

$ mv thesis/draft.txt thesis/quotes.txt

$

The first parameter tells mv what we're "moving",

while the second is where it's to go.

In this case,

we're moving thesis/draft.txt to thesis/quotes.txt,

which has the same effect as renaming the file.

Sure enough,

ls shows us that thesis now contains one file called quotes.txt:

$ ls thesis quotes.txt $

Just for the sake of inconsistency,

mv also works on directories—there

is no separate mvdir command.

Let's move quotes.txt into the current working directory.

We use mv once again,

but this time

we'll just use the name of a directory as the second parameter

to tell mv that we want to keep the filename,

but put the file somewhere new.

(This is why the command is called "move".)

In this case,

the directory name we use is

the special directory name . that we mentioned earlier):

$ mv thesis/quotes.txt .

$

The effect is to move the file from the directory it was in

to the current directory.

ls now shows us that thesis is empty,

and that quotes.txt is in our current directory:

$ ls thesis $ ls quotes.txt quotes.txt $

(Notice that ls with a filename or directory name as an parameter

only lists that file or directory.)

The cp command works very much like mv,

except it copies a file instead of moving it.

We can check that it did the right thing

using ls with two paths as parameters—like most Unix commands,

ls can be given thousands of paths at once:

$ cp quotes.txt thesis/quotations.txt $ ls quotes.txt thesis/quotations.txt quotes.txt thesis/quotations.txt $

To prove that we made a copy,

let's delete the quotes.txt file in the current directory,

and then run that same ls again.

This time,

it tells us that it can't find quotes.txt in the current directory,

but it does find the copy in thesis that we didn't delete:

$ ls quotes.txt thesis/quotations.txt ls: cannot access quotes.txt: No such file or directory thesis/quotations.txt $

Another Useful Abbreviation

The shell interprets the character ~ (tilde) at the start of a path

to mean "the current user's home directory".

For example,

if Vlad's home directory is /home/vlad,

then ~/data is equivalent to /home/vlad/data.

This only works if it is the first character in the path:

/~ is not the user's home directory,

and here/there/~/elsewhere is not /home/vlad/elsewhere.

Summary

- Unix documentation uses '^A' to mean "control-A".

- The shell does not have a trash bin: once something is deleted, it's really gone.

mkdir pathcreates a new directory.cp old newcopies a file.mv old newmoves (renames) a file or directory.nanois a very simple text editor—please use something else for real work.touch pathcreates an empty file if it doesn't already exist.rm pathremoves (deletes) a file.rmdir pathremoves (deletes) an empty directory.

Challenges

What is the output of the closing

lscommand in the sequence shown below?$ pwd /home/thing/data $ ls proteins.dat $ mkdir recombine $ mv proteins.dat recombine $ cp recombine/proteins.dat ../proteins-saved.dat $ ls

Suppose that:

$ ls -F analyzed/ fructose.dat raw/ sucrose.dat

What command(s) could you run so that the commands below will produce the output shown?

$ ls analyzed raw $ ls analyzed fructose.dat sucrose.dat

What does

cpdo when given several filenames and a directory name, as in:$ mkdir backup $ cp thesis/citations.txt thesis/quotations.txt backup

What does

cpdo when given three or more filenames, as in:$ ls -F intro.txt methods.txt survey.txt $ cp intro.txt methods.txt survey.txt

Why do you think

cp's behavior is different frommv's?The command

ls -Rlists the contents of directories recursively, i.e., lists their sub-directories, sub-sub-directories, and so on in alphabetical order at each level. The commandls -tlists things by time of last change, with most recently changed files or directories first. In what order doesls -R -tdisplay things?

Wildcards

Objectives

- Write wildcard expressions that select certain subsets of the files in one or more directories.

- Write wildcard expressions to create multiple files.

- Explain when wildcards are expanded.

- Understand the difference between different wildcard characters.

- Explain what globbing is.

- Understand how to escape special characters.

Duration: 15-20 minutes.

Lesson

At this point we have a basic toolkit which allows us to do things in the shell that you can normally do in Windows, Mac OS, or GNU/Linux with your mouse. For example, we can create, delete and move individual files. We will now learn about wildcards, which will allow us to expand our toolkit so that we can perform operations on multiple files with a single command. We'll start in an empty directory and create four files:

$ touch file1.txt file2.txt otherfile1.txt otherfile2.txt $ ls file1.txt file2.txt otherfile1.txt otherfile2.txt $

If we want to see all filenames that start with "file", we can run the

command ls file*:

$ ls file* file1.txt file2.txt $

Asterisk (*) Wildcard

The asterisk wildcard * in the expression

file* matches zero

or more characters of any kind. Thus the expression file*

matches anything that starts with "file". On the other hand,

*file* would match anything containing the word "file"

at all, such as file1.txt,

file2.txt, otherfile1.txt, and

otherfile2.txt.

When the shell sees a wildcard, it expands it to create a list of

matching filenames before passing those names to whatever

command is being run. This means that when you execute

ls file*, the shell first expands it to

ls file1.txt file2.txt in the above example,

and then runs that command. This process of expansion is

called globbing.

Therefore commands like ls and

rm never see the wildcard characters, just what

those wildcards matched. This is another example of orthogonal

design.

There are four other basic wildcard characters we will explore:

- The question mark,

?, matches any single character, sotext?.txtwould matchtext1.txtortextz.txt, but nottext_1.txt. - The square brackets,

[and], which can be used to specify a set of possible characters. For example,text[135].txtwould matchtext1.txt,text3.txt, andtext5.txt. You can also specify a natural sequence of characters using a dash. For example,text[1-6].txtwould matchtext1.txt,text2.txt, ...,text6.txt. - The exclamation mark,

!, which can be used with square brackets to negate characters. For example,text[!135].txtwould match anything of the formtext?.txtexcept fortext1.txt,text3.txt, andtext5.txt. (Note that the exclamation mark has to be used right after the first square bracket as in the example. An exclamation mark outside of square brackets is not a wildcard, but serves another function of accessing previous commands from your history, as we will later see.) - The curly braces,

{}, are similar to the square brackets, except that they take a list of items separated by commas, and that they always expand all elements of the list, whether or not the corresponding filenames exist. For example,text{1,5}.txtwould expand totext1.txt text5.txtwhether or nottext1.txtandtext5.txtactually exist. The elements of the list need not be single characters, sotext{12,5}.txtexpands totext12.txt text5.txt. Natural sequences can be specified using.., i.e.text{1..5}.txtwould expand totext1.txt text2.txt text3.txt text4.txt text5.txt.

We can use any number of wildcards at a time:

for example,

p*.p?* matches anything that starts with a 'p'

and ends with '.', 'p', and at least one more character

(since the '?' has to match one character,

and the final '*' can match any number of characters).

Thus,

p*.p?* would match

preferred.practice,

and even p.pi

(since the first '*' can match no characters at all),

but not quality.practice (doesn't start with 'p')

or preferred.p (there isn't at least one character after the '.p').

Let's see some more examples of wildcards in action. First we will remove everything in the current directory:

$ rm * $ ls $

Now that our working directory is empty, let's create some empty files using the touch command and the curly braces:

$ touch file{4,6,7}.txt file_{a..e}.txt

$

The curly braces expand the second token file{4,6,7}.txt

into three

separate file names, and the third token file_{a..e}.txt

expands into five separate

file names. So, now if we list files using the asterisk wildcard,

we get:

$ ls file* file4.txt file7.txt file_b.txt file_d.txt file6.txt file_a.txt file_c.txt file_e.txt $

We have matched all files in our current directory that begin

with file. Now, let us list a subset of those files using

the question mark:

$ ls file?.txt file4.txt file6.txt file7.txt $

Note how this glob pattern matched only the numbered files. That's

because only the numbered files have a single character

between file and .txt. The lettered files

have two characters between file and .txt:

$ ls file??.txt file_a.txt file_b.txt file_c.txt file_d.txt file_e.txt $

Let's try out using the ! wildcard with square brackets.

The pattern file[!46]* would match everything beginning with

"file", unless the next character is a 4 or a 6. Thus,

continuing with our example, if we do rm file[!46]*, we

will delete everything except file4.txt and

file6.txt:

$ ls file4.txt file7.txt file_b.txt file_d.txt file6.txt file_a.txt file_c.txt file_e.txt $ rm file[!46]* $ ls file4.txt file6.txt $

It's also possible to combine and nest wildcards. For example,

file{4,_?}.txt would match file4.txt

and file_?.txt, where ? matches any

single character. To demonstrate this, let's re-create all of

our files:

$ touch file{4,6,7}.txt file_{a..e}.txt $ ls file4.txt file7.txt file_b.txt file_d.txt file6.txt file_a.txt file_c.txt file_e.txt $

Now let's run ls file{4,_?}.txt:

$ ls file{4,_?}.txt file4.txt file_b.txt file_d.txt file_a.txt file_c.txt file_e.txt $

Finally, it is important to understand what happens if a

wildcard expression doesn't match any files. In this case,

the wildcard characters are interpreted literally. For

example, if we were to enter ls file[AB].txt,

the system will first try to find fileA.txt or

fileB.txt. If it found either of those, then

the wildcard expression would be expanded appropriately,

but when it fails to find either of

them, the expression remains unevaluated as

file[AB].txt, and is then passed to ls.

At this point,

ls will try to find a single file that is

actually named file[AB].txt.

We can see that this is the case if we try

ls file[AB].txt:

$ ls file[AB].txt ls: file[AB].txt: No such file or directory $

The system tells us that file[AB].txt doesn't exist

since this was the file it was looking for after the wildcard

expression found no matches. Still not

convinced? Let's actually create a file named file[AB].txt.

To do this, we need some way of telling the computer that we

actually want the [ and ] characters to be in the filename, and

that we don't mean them as wildcards.

Escaping Special Characters

Wildcard characters, like *, [],

and {} are all special characters in bash.

Another example of a special character which we have already

encountered, and that is not

a wildcard character, is the forward slash /,

which is used to delimit directory levels. It is possible to

escape special characters, i.e. turn off their

special meanings.

We can escape a single character by preceding it with a

backslash, \. This tells the shell to interpret

that character literally.

For example, writing \/

tells bash to interpret the forward slash literally. We can escape

more than one character by enclosing a set of characters in

either single or double quotes. Single quotes preserve the

literal value of each character enclosed. Double quotes

preserve the literal value of each character enclosed with the

exception of $, `, and

\, which all retain their special meanings.

Therefore, inside double quotes,

backslashes \ preceding any of these three special

characters will be removed. Otherwise, the backslash will remain.

For the example above of creating a file named

file[AB].txt, one can do any of the following:

touch "file[AB].txt",

touch 'file[AB].txt',

touch file\[AB\].txt, or

touch "file\[AB\].txt".

Now we can create the file file[AB].txt:

$ touch "file[AB].txt" $ ls file4.txt file7.txt file_a.txt file_c.txt file_e.txt file6.txt file[AB].txt file_b.txt file_d.txt $

If we do ls file[AB].txt, it will find the

file we've just created:

$ ls file[AB].txt file[AB].txt $

But if we create a file named fileA.txt,

this will be found first by the wildcard expansion,

since it first looks for fileA.txt and

fileB.txt:

$ touch fileA.txt $ ls file[AB].txt fileA.txt $

If we really do want to look for something called

file[AB].txt, and not fileA.txt

or fileB.txt, then we have to escape the special

characters "[" and "]". We can do this:

$ ls file\[AB\].txt file[AB].txt $

or this:

$ ls "file[AB].txt" file[AB].txt $

Single quotes would work as well in this case.

Summary

- Use wildcards to match filenames.

*is a wildcard pattern that matches zero or more characters in a pathname.?is a wildcard pattern that matches any single character.[]and{}allow you to match specified sets of characters in different ways.[!characters]matches everything other than the characters specified.- Wildcards are useful when performing actions on more than one file at a time.

- The shell matches wildcards before running commands.

- We can use wildcard patterns for nearly any command (cp, mv, rm, etc.).

- Globbing is the process through which wildcard patterns are matched to filenames.

- You can use multiple wildcard expressions in a single glob.

Challenges

In the lesson we started in an empty directory and created several files using the command:

$ touch file{4,6,7}.txt file{a..e}.txt $What files, if any, would have been created if we had instead used square brackets? I.e.

$ touch file[467].txt file[a-e].txt $We also discussed the effect of running

ls file[AB].txtwhen neither the filefileA.txt, nor the filefileB.txt, nor the filefile[AB].txtexisted. What would be the effect of runningls file{A,B}.txtunder these circumstances?Recall the discussion of escaping characters using single quotes, double quotes, and the backslash.

What would the output of

lsbe if we issued the following bash commands?$ touch "apple_file\ orange_file" $ touch "apple_file orange_file" $

How can we create the same files as in the previous problem without any quotes? Hint: This requires some careful thought about how to apply the backslash notation. Would single quotes have produced different output files if they were used instead of double quotes in the previous problem?

Pipes and Filters

Objectives

- Redirect a command's output to a file.

- Process a file instead of keyboard input using redirection.

- Construct command pipelines with two or more stages.

- Explain what usually happens if a program or pipeline isn't given any input to process.

- Explain Unix's "small pieces, loosely joined" philosophy.

Duration: 20-30 minutes.

Lesson

Now that we know a few basic commands and have learned how

to use wildcards,

we can finally look at the shell's most powerful feature:

the ease with which it lets you combine existing programs in new ways.

We'll start with a directory called molecules

that contains six files describing some simple organic molecules.

The .pdb extension indicates that these files are in Protein Data Bank format,

a simple text format that specifies the type and position of each atom in the molecule.

$ ls molecules cubane.pdb ethane.pdb methane.pdb octane.pdb pentane.pdb propane.pdb $

Let's go into that directory with cd

and run the command wc *.pdb.

wc is the "word count" command;

it counts the number of lines, words, and characters in files:

$ cd molecules $ wc *.pdb 20 156 1158 cubane.pdb 12 84 622 ethane.pdb 9 57 422 methane.pdb 30 246 1828 octane.pdb 21 165 1226 pentane.pdb 15 111 825 propane.pdb 107 819 6081 total $

If we run wc -l instead of just wc,

the output shows only the number of lines per file:

$ wc -l *.pdb 20 cubane.pdb 12 ethane.pdb 9 methane.pdb 30 octane.pdb 21 pentane.pdb 15 propane.pdb 107 total $

We can also use -w to get only the number of words,

or -c to get only the number of characters.

Now, which of these files is shortest? It's an easy question to answer when there are only six files, but what if there were 6000? That's the kind of job we want a computer to do.

Our first step toward a solution is to run the command:

$ wc -l *.pdb > lengths

The > tells the shell to redirect

the command's output to a file

instead of printing it to the screen.

The shell will create the file if it doesn't exist,

or overwrite the contents of that file if it does.

(This is why there is no screen output:

everything that wc would have printed has gone into the file lengths instead.)

ls lengths confirms that the file exists:

$ ls lengths lengths $

We can now send the content of lengths to the screen using cat lengths.

cat stands for "concatenate":

it prints the contents of files one after another.

In this case,

there's only one file,

so cat just shows us what it contains:

$ cat lengths 20 cubane.pdb 12 ethane.pdb 9 methane.pdb 30 octane.pdb 21 pentane.pdb 15 propane.pdb 107 total $

Now let's use the sort command to sort its contents.

This does not change the file.

Instead,

it sends the sorted result to the screen:

$ sort lengths 9 methane.pdb 12 ethane.pdb 15 propane.pdb 20 cubane.pdb 21 pentane.pdb 30 octane.pdb 107 total $

We can put the sorted list of lines in another temporary file called sorted-lengths

by putting > sorted-lengths after the command,

just as we used > lengths to put the output of wc into lengths.

Once we've done that,

we can run another command called head to get the first few lines in sorted-lengths:

$ sort lengths > sorted-lengths $ head -1 sorted-lengths 9 methane.pdb $

Giving head the parameter -1 tells us we only want the first line of the file;

-20 would get the first 20, and so on.

Since sorted-lengths the lengths of our files ordered from least to greatest,

the output of head must be the file with the fewest lines.

If you think this is confusing, you're in good company:

even once you understand what wc, sort, and head do,

all those intermediate files make it hard to follow what's going on.

How can we make it easier to understand?

Let's start by getting rid of the sorted-lengths file

by running sort and head together:

$ sort lengths | head -1 9 methane.pdb $

The vertical bar between the two commands is called a pipe. It tells the shell that we want to use the output of the command on the left as the input to the command on the right. The computer might create a temporary file if it needs to, or copy data from one program to the other in memory, or something else entirely: we don't have to know or care.

Well, if we don't need to create the temporary file sorted-lengths,

can we get rid of the lengths file too?

The answer is "yes":

we can use another pipe to send the output of wc directly to sort,

which then sends its output to head:

$ wc -l *.pdb | sort | head -1 9 methane.pdb $

This is exactly like a mathematician nesting functions like sin(πx)2

and saying "the square of the sine of x times π":

in our case, the calculation is "head of sort of word count of *.pdb".

Inside Pipes

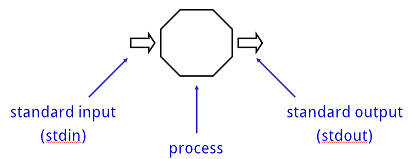

Here's what actually happens behind the scenes when we create a pipe. When a computer runs a program—any program—it creates a process in memory to hold the program's software and its current state. Every process has an input channel called standard input. (By this point, you may be surprised that the name is so memorable, but don't worry: most Unix programmers call it stdin.) Every process also has a default output channel called standard output, or stdout (Figure 11).

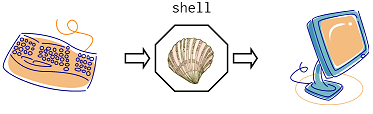

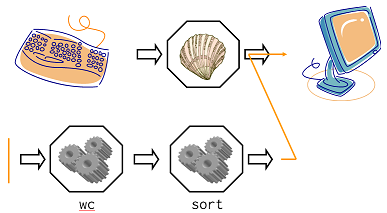

The shell is just another program, and runs in a process like any other. Under normal circumstances, whatever we type on the keyboard is sent to the shell on its standard input, and whatever it produces on standard output is displayed on our screen (Figure 12):

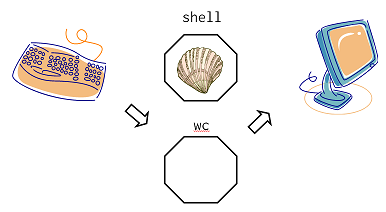

When we run a program, the shell creates a new process. It then temporarily sends whatever we type on our keyboard to that process's standard input, and whatever the process sends to standard output to the screen (Figure 13):

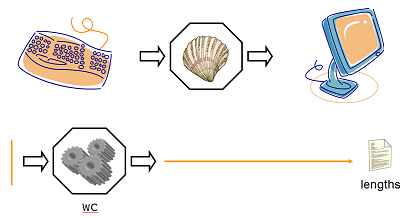

Here's what happens when we run wc -l *.pdb > lengths.

The shell starts by telling the computer to create a new process to run the wc program.

Since we've provided some filenames as parameters,

wc reads from them instead of from standard input.

And since we've used > to redirect output to a file,

the shell connects the process's standard output to that file

(Figure 14).

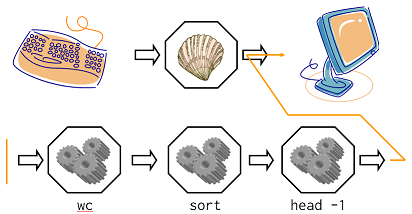

If we run wc -l *.pdb | sort instead,

the shell creates two processes,

one for each process in the pipe,

so that wc and sort run simultaneously.

The standard output of wc is fed directly to the standard input of sort;

since there's no redirection with >, sort's output goes to the screen

(Figure 15):

And if we run wc -l *.pdb | sort | head -1,

we get the three processes shown in Figure 16

with data flowing from the files,

through wc to sort,

and from sort through head to the screen.

This simple idea is why Unix has been so successful. Instead of creating enormous programs that try to do many different things, Unix programmers focus on creating lots of simple tools that each do one job well, and work well with each other. Ten such tools can be combined in 100 ways, and that's only looking at pairings: when we start to look at pipes with multiple stages, the possibilities are almost uncountable.

This programming model is called pipes and filters. We've already seen pipes; a filter is a program that transforms a stream of input into a stream of output. Almost all of the standard Unix tools can work this way: unless told to do otherwise, they read from standard input, do something with what they've read, and write to standard output.

The key is that any program that reads lines of text from standard input, and writes lines of text to standard output, can be combined with every other program that behaves this way as well. You can and should write your programs this way, so that you and other people can put those programs into pipes to multiply their power.

Redirecting Input

As well as using > to redirect a program's output,

we can use < to redirect its input,

i.e.,

to read from a file instead of from standard input.

For example, instead of writing wc ammonia.pdb,

we could write wc < ammonia.pdb.

In the first case,

wc gets a command line parameter telling it what file to open.

In the second,

wc doesn't have any command line parameters,

so it reads from standard input,

but we have told the shell to send the contents of ammonia.pdb to wc's standard input.

Nelle's Pipeline: Checking Files

Nelle has run her samples through the assay machines

and created 1520 files in the north-pacific-gyre/2012-07-03 directory

described earlier.

As a quick sanity check,

she types:

$ cd north-pacific-gyre/2012-07-03 $ wc -l *.txt

The output is 1520 lines that look like this:

300 NENE01729A.txt 300 NENE01729B.txt 300 NENE01736A.txt 300 NENE01751A.txt 300 NENE01751B.txt 300 NENE01812A.txt ... ...

Now she types this:

$ wc -l *.txt | sort | head -5

240 NENE02018B.txt

300 NENE01729A.txt

300 NENE01729B.txt

300 NENE01736A.txt

300 NENE01751A.txt

Whoops: one of the files is 60 lines shorter than the others. When she goes back and checks it, she sees that she did that assay at 8:00 on a Monday morning—someone was probably in using the machine on the weekend, and she forgot to reset it. Before re-running that sample, she checks to see if any files have too much data:

$ wc -l *.txt | sort | tail -5

300 NENE02040A.txt

300 NENE02040B.txt

300 NENE02040Z.txt

300 NENE02043A.txt

300 NENE02043B.txt

Those numbers look good—but what's that 'Z' doing there in the third-to-last line? All of her samples should be marked 'A' or 'B'; by convention, her lab uses 'Z' to indicate samples with missing information. To find others like it, she does this:

$ ls *Z.txt

NENE01971Z.txt NENE02040Z.txt

Sure enough,

when she checks the log on her laptop,

there's no depth recorded for either of those samples.

Since it's too late to get the information any other way,

she must exclude those two files from her analysis.

She could just delete them using rm,

but there are actually some analyses she might do later

where depth doesn't matter,

so instead,

she'll just be careful later on to select files using the wildcard expression

*[AB].txt.

As always, the '*' matches any number of characters,

and recall from the previous section that [AB]

matches either an 'A' or a 'B',

so this matches all the valid data files she has.

Summary

command > fileredirects a command's output to a file.first | secondis a pipeline: the output of the first command is used as the input to the second.- The best way to use the shell is to use pipes to combine simple single-purpose programs (filters).

catdisplays the contents of its inputs.headdisplays the first few lines of its input.sortsorts its inputs.taildisplays the last few lines of its input.wccounts lines, words, and characters in its inputs.

Challenges

If we run

sorton each of the files shown on the left in the table below, without the-nflag, the output is as shown on the right:1 10 2 19 22 6

1 10 19 2 22 6

1 10 2 19 22 6

1 2 6 10 19 22

Explain why we get different answers for the two files.

What is the difference between:

wc -l < *.datand:

wc -l *.datThe command

uniqremoves adjacent duplicated lines from its input. For example, if a filesalmon.txtcontains:coho coho steelhead coho steelhead steelhead

then

uniq salmon.txtproduces:coho steelhead coho steelhead

Why do you think

uniqonly removes adjacent duplicated lines? (Hint: think about very large data sets.) What other command could you combine with it in a pipe to remove all duplicated lines?A file called

animals.txtcontains the following data:2012-11-05,deer 2012-11-05,rabbit 2012-11-05,raccoon 2012-11-06,rabbit 2012-11-06,deer 2012-11-06,fox 2012-11-07,rabbit 2012-11-07,bear

Fill in the table showing what lines of text pass through each pipe in the pipeline below.

cat animals.txt|head -5|tail -3|sort -r>final.txtThe command:

$ cut -d , -f 2 animals.txtproduces the following output:

deer rabbit raccoon rabbit deer fox rabbit bearWhat other command(s) could be added to this in a pipeline to find out what animals have been seen (without any duplicates in the animals' names)?

Summary

The Unix shell is older than most of the people who use it. It has survived so long because it is one of the most productive programming environments ever created—maybe even the most productive. Its syntax may be cryptic, but people who have mastered it can experiment with different commands interactively, then use what they have learned to automate their work. Graphical user interfaces may be better at the first, but the shell is still unbeaten at the second. And as Alfred North Whitehead wrote in 1911, "Civilization advances by extending the number of important operations which we can perform without thinking about them."